Unprivileged LXC containers with Open vSwitch

This is still a draft: the last part, on how to enable an unprivileged user to create a veth and connect it to Open vSwitch, is missing.

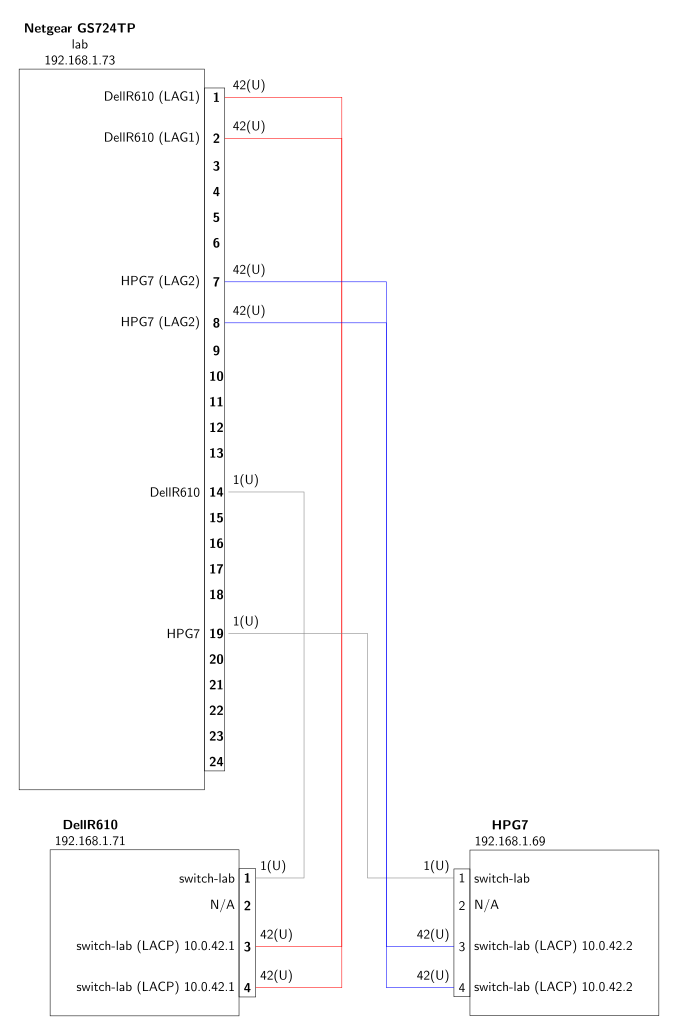

This article describes a full end-to-end lab configuration for running unprivileged (non-root) LXC containers on two different servers. Containers are connected to a dedicated VLAN available on both servers. The set-up relies on Open vSwitch to provide an overlay network between the two servers, and uses LACP on two Ethernet links to enable failover on the VLAN.

The basic setup consists of two servers, a Dell R610 and an HP G7.

At the time of writing, both run Debian 11 Bullseye with Linux kernel 5.4.0-4-amd64.

Network configuration relies on a Netgear GS724TP switch. This managed switch supports LACP and VLANs.

Each server uses one interface, connected to the switch, for the management network, which reaches the rest of the network through a gateway. This is the interface I use to connect to the servers via SSH from my workstation.

A lab network is also set up for the containers or VMs on the two servers. For this, two interfaces on each server are combined into a bond interface; both are connected to the switch on a dedicated VLAN (42). For the bonding to work, a Link Aggregation Group (LAG) is configured on the switch for each server.

Bonding

Enabling bonding on Linux is very simple with the [*ifenslave*](https://packages.debian.org/bullseye/ifenslave) package on Debian.

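$ sudo apt-get install ifenslave

Then declare the interfaces in /etc/network/interfaces.d/setup (content for each server below) and bring the bond up:

$ sudo ifup bond0

Content of the /etc/network/interfaces.d/setup file for the Dell R610: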

auto lo

iface lo inet loopback

auto eno1

iface eno1 inet dhcp

auto bond0

iface bond0 inet manual

bond-slaves eno3 eno4

bond-mode active-backup

bond-miimon 100

bond-downdelay 200

bond-updelay 200

allow-bond0 eno3

iface eno3 inet manual

bond-master bond0

allow-bond0 eno4

iface eno4 inet manual

bond-master bond0

Content of the /etc/network/interfaces.d/setup file for the HP G7, identical except for the names of the interfaces:

auto lo

iface lo inet loopback

auto enp3s0f0

iface enp3s0f0 inet dhcp

auto bond0

iface bond0 inet manual

bond-slaves enp4s0f0 enp4s0f1

bond-mode active-backup

bond-miimon 100

bond-downdelay 200

bond-updelay 200

allow-bond0 enp4s0f0

iface enp4s0f0 inet manual

bond-master bond0

allow-bond0 enp4s0f1

iface enp4s0f1 inet manual

bond-master bond0
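The two files differ only in interface names, so the bond part could be generated from one template. The sketch below is a hypothetical POSIX shell helper, `bond_stanza`, which is not part of ifenslave. It prints the bond stanzas with the same active-backup settings as above. It uses the `bond-slaves` keyword documented by the Debian ifenslave package.

```shell
#!/bin/sh
# Hypothetical helper: print the ifupdown stanzas for an active-backup bond.
# Usage: bond_stanza BOND IFACE...
bond_stanza() {
    bond=$1
    shift
    printf 'auto %s\n' "$bond"
    printf 'iface %s inet manual\n' "$bond"
    printf '    bond-slaves %s\n' "$*"
    printf '    bond-mode active-backup\n'
    printf '    bond-miimon 100\n'
    printf '    bond-downdelay 200\n'
    printf '    bond-updelay 200\n'
    # One stanza per member interface, pointing back at the bond
    for slave in "$@"; do
        printf '\nallow-%s %s\n' "$bond" "$slave"
        printf 'iface %s inet manual\n' "$slave"
        printf '    bond-master %s\n' "$bond"
    done
}
```

For example, `bond_stanza bond0 enp4s0f0 enp4s0f1` prints the HP G7 variant. Its output can be appended to the file with `sudo tee -a`.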

At this stage the two servers can communicate with each other, and with the rest of the network, through their primary interface on the “public” LAN. To enable the “Lab” LAN we still need to configure the bond interface; this is done below with Open vSwitch.
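With `bond-mode active-backup`, only one member interface carries traffic at a time. One way to check failover is to read the bonding driver's status file, `/proc/net/bonding/bond0`, before and after unplugging one of the cables. The hypothetical `active_slave` function below extracts the `Currently Active Slave:` line from that file, or from a copy of it passed as an argument.

```shell
#!/bin/sh
# Hypothetical helper: print the member interface currently carrying traffic
# on an active-backup bond, as reported by the Linux bonding driver.
# Usage: active_slave [STATUS_FILE]   (default: /proc/net/bonding/bond0)
active_slave() {
    awk -F': ' '/^Currently Active Slave:/ { print $2 }' \
        "${1:-/proc/net/bonding/bond0}"
}
```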

Open vSwitch

Open vSwitch is installed from source. Release 2.12.0 was the latest at the time of writing. Compilation and installation are straightforward, and only the installation step needs root:

$ ./boot.sh

$ ./configure

$ make -j24

$ sudo make install

Then start Open vSwitch:

$ sudo mkdir -p /usr/local/etc/openvswitch

$ sudo ovsdb-tool create /usr/local/etc/openvswitch/conf.db vswitchd/vswitch.ovsschema

$ sudo mkdir -p /usr/local/var/run/openvswitch

$ sudo /usr/local/sbin/ovsdb-server /usr/local/etc/openvswitch/conf.db --log-file=/tmp/ovsdb-server.log --remote=punix:/usr/local/var/run/openvswitch/db.sock --remote=db:Open_vSwitch,Open_vSwitch,manager_options --private-key=db:Open_vSwitch,SSL,private_key --certificate=db:Open_vSwitch,SSL,certificate --bootstrap-ca-cert=db:Open_vSwitch,SSL,ca_cert --pidfile --detach

$ sudo /usr/local/bin/ovs-vsctl --no-wait init

$ sudo /usr/local/sbin/ovs-vswitchd --pidfile --detach

The commands above create the database /usr/local/etc/openvswitch/conf.db from the vswitch schema. They then start ovsdb-server on that database, listening on the Unix socket /usr/local/var/run/openvswitch/db.sock, and finally start the ovs-vswitchd daemon.
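The daemons have to be started again after every reboot. The source tree also installs an `ovs-ctl` script (in `/usr/local/share/openvswitch/scripts` with the default prefix), which can start both daemons with `ovs-ctl start`.

As an alternative, the sketch below wraps the commands above in a hypothetical `ovs_start` function. It leaves out the one-time `ovsdb-tool create` step. It also runs `ovs-vsctl --no-wait init`, which the Open vSwitch installation guide recommends once the database has been created. Setting `DRY_RUN=1` only prints the commands, which is handy for checking the paths.

```shell
#!/bin/sh
# Hypothetical start helper for Open vSwitch installed from source with the
# default /usr/local prefix. Run as root; DRY_RUN=1 only prints the commands.
PREFIX=${PREFIX:-/usr/local}
DB=$PREFIX/etc/openvswitch/conf.db
RUNDIR=$PREFIX/var/run/openvswitch

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

ovs_start() {
    run mkdir -p "$RUNDIR"
    # Database server, listening on the Unix socket used by ovs-vsctl
    run "$PREFIX/sbin/ovsdb-server" "$DB" --log-file=/tmp/ovsdb-server.log \
        --remote="punix:$RUNDIR/db.sock" \
        --remote=db:Open_vSwitch,Open_vSwitch,manager_options \
        --private-key=db:Open_vSwitch,SSL,private_key \
        --certificate=db:Open_vSwitch,SSL,certificate \
        --bootstrap-ca-cert=db:Open_vSwitch,SSL,ca_cert \
        --pidfile --detach
    # Initialize the database (harmless if already done)
    run "$PREFIX/bin/ovs-vsctl" --no-wait init
    # Switch daemon, which sets up the datapath and bridges such as br42
    run "$PREFIX/sbin/ovs-vswitchd" --pidfile --detach
}
```

After sourcing the file, `DRY_RUN=1 ovs_start` can be run as any user to preview the commands. `ovs_start` must be run as root to actually start the daemons.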

We then create a bridge br42 and connect the bond0 interface to it:

$ sudo /usr/local/bin/ovs-vsctl add-br br42

$ sudo /usr/local/bin/ovs-vsctl add-port br42 bond0

Finally, we bring the bridge up, which gives it its IP address:

$ sudo ifup br42
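`ifup br42` only works if br42 is declared in the ifupdown configuration. The minimal stanza below assumes a static address. Here 10.0.42.1/24 is the address that appears for br42 in the output below; the second server would need its own address on the same subnet.

It is printed by a hypothetical helper, `bridge_stanza`. The `auto` keyword is deliberately left out: since Open vSwitch is started by hand, br42 does not exist yet when the boot scripts run.

```shell
#!/bin/sh
# Hypothetical helper: print a static ifupdown stanza for an OVS bridge.
# Usage: bridge_stanza BRIDGE ADDRESS/PREFIXLEN
# No "auto" line: the bridge only exists once ovs-vswitchd is running.
bridge_stanza() {
    printf 'iface %s inet static\n' "$1"
    printf '    address %s\n' "$2"
}
```

For example, `bridge_stanza br42 10.0.42.1/24 | sudo tee /etc/network/interfaces.d/br42`. The file name is an assumption; any file sourced from /etc/network/interfaces works.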

The resulting configuration looks like this:

# ip a

(...)

4: eno3: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master bond0 state UP group default qlen 1000

link/ether 6e:98:69:a8:70:6b brd ff:ff:ff:ff:ff:ff

5: eno4: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master bond0 state UP group default qlen 1000

link/ether 6e:98:69:a8:70:6b brd ff:ff:ff:ff:ff:ff

6: bond0: <BROADCAST,MULTICAST,MASTER,UP,LOWER_UP> mtu 1500 qdisc noqueue master ovs-system state UP group default qlen 1000

link/ether 6e:98:69:a8:70:6b brd ff:ff:ff:ff:ff:ff

inet6 fe80::6c98:69ff:fea8:706b/64 scope link

valid_lft forever preferred_lft forever

(...)

9: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 0e:c0:fe:c4:87:a2 brd ff:ff:ff:ff:ff:ff

10: br42: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 1a:b2:71:d0:53:42 brd ff:ff:ff:ff:ff:ff

inet 10.0.42.1/24 brd 10.0.42.255 scope global br42

valid_lft forever preferred_lft forever

inet6 fe80::18b2:71ff:fed0:5342/64 scope link

valid_lft forever preferred_lft forever

In this configuration only the bridge has an IPv4 address. bond0 only has an IPv6 link-local address, and the two interfaces that make up the bond (eno3 and eno4 here) have no address at all.
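To check this quickly, `ip -o -4 addr show` prints one line per IPv4 address, with the interface name in the second field. The hypothetical `ipv4_ifaces` filter below keeps only those names. On the server above, the list should not include bond0, eno3 or eno4.

```shell
#!/bin/sh
# Hypothetical helper: list the interfaces that carry an IPv4 address,
# reading "ip -o -4 addr show" output on stdin (one line per address).
ipv4_ifaces() {
    awk '$3 == "inet" { print $2 }' | sort -u
}
```

Usage: `ip -o -4 addr show | ipv4_ifaces`.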

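NAT

To let the containers reach the rest of the network, the following script (run as root) does two things. It enables IPv4 forwarding, and it masquerades traffic from the lab network 10.0.42.0/24 that leaves through the management interface eno1: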

#!/bin/bash

set -eu

IFACE_WAN=eno1

IFACE_LAN=br42

NETWORK_LAN=10.0.42.0/24

echo "1" > /proc/sys/net/ipv4/ip_forward  # enable routing (not persistent across reboots)

iptables -t nat -A POSTROUTING -o "$IFACE_WAN" -s "$NETWORK_LAN" ! -d "$NETWORK_LAN" -j MASQUERADE

iptables -A FORWARD -d "$NETWORK_LAN" -i "$IFACE_WAN" -o "$IFACE_LAN" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

iptables -A FORWARD -s "$NETWORK_LAN" -i "$IFACE_LAN" -j ACCEPT
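Running the script above twice appends every rule twice. A possible refinement checks each rule with `iptables -C`, which exits non-zero when the rule is absent, before appending it. The `ensure_rule` function and the `IPTABLES` override below are hypothetical names introduced for this sketch.

```shell
#!/bin/sh
# Hypothetical helper: append an iptables rule only if it is not already there.
# Usage: ensure_rule TABLE CHAIN RULE-SPEC...
# IPTABLES can point to another binary (e.g. iptables-legacy); run as root.
IPTABLES=${IPTABLES:-iptables}

ensure_rule() {
    table=$1
    chain=$2
    shift 2
    # -C (--check) succeeds only if an identical rule exists in the chain
    if ! $IPTABLES -t "$table" -C "$chain" "$@" 2>/dev/null; then
        $IPTABLES -t "$table" -A "$chain" "$@"
    fi
}
```

For example: `ensure_rule nat POSTROUTING -o "$IFACE_WAN" -s "$NETWORK_LAN" ! -d "$NETWORK_LAN" -j MASQUERADE`.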

LXC

The first step is to configure LXC to use Open vSwitch as the network bridge.

Update /etc/lxc/default.conf to look like this. Using Open vSwitch requires declaring script.up and script.down scripts:

$ cat /etc/lxc/default.conf

lxc.net.0.type = veth

lxc.net.0.flags = up

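lxc.net.0.hwaddr = 00:16:3e:xx:xx:xx

lxc.apparmor.profile = generated

lxc.apparmor.allow_nesting = 1

lxc.net.0.script.up = /etc/lxc/ovsup

lxc.net.0.script.down = /etc/lxc/ovsdown

LXC replaces each x in the hwaddr template with a random hexadecimal digit, so each container gets its own MAC address instead of all containers sharing one fixed address. Below is the content of the two scripts that connect a container to Open vSwitch and disconnect it; both must be executable: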

$ cat /etc/lxc/ovsup

#!/bin/bash

BRIDGE="br42"

sudo /usr/local/bin/ovs-vsctl --may-exist add-br "$BRIDGE"

sudo ip link set dev "$5" up  # $5: host-side veth name passed by LXC

sudo /usr/local/bin/ovs-vsctl --if-exists del-port "$BRIDGE" "$5"

sudo /usr/local/bin/ovs-vsctl --may-exist add-port "$BRIDGE" "$5"

$ cat /etc/lxc/ovsdown

#!/bin/bash

BRIDGE="br42"

sudo ip link set dev "$5" down  # ifdown only handles interfaces declared for ifupdown

sudo /usr/local/bin/ovs-vsctl --if-exists del-port "$BRIDGE" "$5"
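LXC calls these hooks with the following arguments:

- the container name;
- the section name (`net`);
- the execution context (`up` or `down`);
- the network type (`veth`);
- for a veth, the host-side device name.

This is why both scripts use `$5`. Because the context is passed as the third argument, a single script could serve as both `script.up` and `script.down`.

The sketch below is hypothetical: `ovs_hook` and the `BRIDGE`/`VSCTL` overrides are not part of LXC. Like the scripts above, it assumes the bridge br42 and ovs-vsctl in /usr/local/bin. It omits `sudo`, since LXC runs the hooks of privileged containers as root.

```shell
#!/bin/sh
# Hypothetical combined LXC network hook for Open vSwitch (run by LXC as root).
# Arguments from LXC: $1 container, $2 "net", $3 "up" or "down",
# $4 network type, $5 host-side device name (for veth).
BRIDGE=${BRIDGE:-br42}
VSCTL=${VSCTL:-/usr/local/bin/ovs-vsctl}

ovs_hook() {
    host_if=$5
    case "$3" in
        up)
            # Bring the host end up and plug it into the bridge
            ip link set dev "$host_if" up
            "$VSCTL" --may-exist add-port "$BRIDGE" "$host_if"
            ;;
        down)
            # Unplug the host end from the bridge
            "$VSCTL" --if-exists del-port "$BRIDGE" "$host_if"
            ;;
        *)
            echo "ovs_hook: unexpected context '$3'" >&2
            return 1
            ;;
    esac
}

# Act only when invoked by LXC with the full argument list
if [ "$#" -ge 5 ]; then
    ovs_hook "$@"
fi
```

Saved under a name such as /etc/lxc/ovshook (hypothetical) and made executable, the same file would be referenced by both `lxc.net.0.script.up` and `lxc.net.0.script.down`.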

The next step is to enable unprivileged users to create containers.

Enable kernel.unprivileged_userns_clone in sysctl:

$ sudo sh -c 'echo "kernel.unprivileged_userns_clone=1" > /etc/sysctl.d/80-lxc-userns.conf'

$ sudo sysctl --system
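To confirm the setting took effect, the current value can be read back from /proc; it should be 1. The kernel.unprivileged_userns_clone knob comes from a patch carried by the Debian kernel and does not exist in upstream Linux. The hypothetical check below therefore distinguishes three cases: enabled, disabled, and absent. It takes the path as an optional argument so it can be pointed at a copy of the file.

```shell
#!/bin/sh
# Hypothetical check for unprivileged user namespaces on a Debian kernel.
# Exit status: 0 enabled, 1 disabled, 2 knob not present on this kernel.
# Usage: userns_enabled [FILE]
userns_enabled() {
    f=${1:-/proc/sys/kernel/unprivileged_userns_clone}
    [ -r "$f" ] || return 2
    [ "$(cat "$f")" = 1 ]
}
```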

Create an account for an unprivileged user lxcunpriv.

$ sudo useradd -s /bin/bash -c 'unprivileged LXC user' -m lxcunpriv

Then declare the subordinate UID and GID ranges that this user may map into its containers' user namespaces, by creating or modifying the files below:

$ cat /etc/subuid

lxcunpriv:165536:65536

$ cat /etc/subgid

lxcunpriv:165536:65536
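On Debian, `useradd` may already have allocated a subordinate range for the new user in these files, and two users must not share a range. Instead of editing the files by hand, `sudo usermod --add-subuids 165536-231071 --add-subgids 165536-231071 lxcunpriv` adds the same ranges (231071 = 165536 + 65536 - 1).

The hypothetical `subid_conflicts` function below checks that a range does not overlap another user's entry in either file.

```shell
#!/bin/sh
# Hypothetical helper: check that the id range [START, START+COUNT) does not
# overlap another user's entry in a subordinate id file (/etc/subuid or
# /etc/subgid). Prints conflicting lines; exit status 0 means no conflict.
# Usage: subid_conflicts FILE USER START COUNT
subid_conflicts() {
    awk -F: -v user="$2" -v s="$3" -v c="$4" '
        BEGIN { s += 0; c += 0; bad = 0 }
        # Two ranges overlap when each one starts before the other ends
        NF == 3 && $1 != user && s < $2 + $3 && $2 + 0 < s + c { print; bad = 1 }
        END { exit bad }
    ' "$1"
}
```

Usage: `subid_conflicts /etc/subuid lxcunpriv 165536 65536 && subid_conflicts /etc/subgid lxcunpriv 165536 65536`.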

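Unprivileged containers are stored under ~/.local/share/lxc by default. The container's root user, mapped to host UID 165536, therefore needs search (x) permission on every directory along that path:

$ sudo -u lxcunpriv mkdir -p /home/lxcunpriv/.local/share

$ sudo setfacl -m u:165536:x /home/lxcunpriv/ /home/lxcunpriv/.local /home/lxcunpriv/.local/share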

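The file /etc/lxc/lxc-usernet then allows lxcunpriv to create up to 10 veth interfaces attached to br42:

$ cat /etc/lxc/lxc-usernet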

lxcunpriv veth br42 10

Create a ~lxcunpriv/.config/lxc/default.conf file to declare the configuration of containers for the user:

$ cat ~lxcunpriv/.config/lxc/default.conf

lxc.include = /etc/lxc/default.conf

lxc.idmap = u 0 165536 65536

lxc.idmap = g 0 165536 65536

# "Secure" mounting

lxc.mount.auto = proc:mixed sys:ro cgroup:mixed

# Network configuration

lxc.net.0.type = veth

lxc.net.0.link = br42

lxc.net.0.flags = up

lxc.net.0.hwaddr = 00:FF:xx:xx:xx:xx
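The two `lxc.idmap` lines must match the ranges granted in /etc/subuid and /etc/subgid. The hypothetical helper below derives them from those files instead of copying the numbers by hand. It maps container ids starting at 0 onto the first range it finds for the user.

```shell
#!/bin/sh
# Hypothetical helper: print lxc.idmap lines for USER from its first entry
# in the subordinate uid and gid files.
# Usage: idmap_lines USER [SUBUID_FILE] [SUBGID_FILE]
idmap_lines() {
    awk -F: -v u="$1" '$1 == u { print "lxc.idmap = u 0 " $2 " " $3; exit }' \
        "${2:-/etc/subuid}"
    awk -F: -v u="$1" '$1 == u { print "lxc.idmap = g 0 " $2 " " $3; exit }' \
        "${3:-/etc/subgid}"
}
```

The output of `idmap_lines lxcunpriv` can replace the two `lxc.idmap` lines in the file above.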

TODO

Some useful links on configuring unprivileged containers with LXC:

- https://stgraber.org/2013/12/20/lxc-1-0-blog-post-series/

- https://linuxcontainers.org/lxc/manpages/

- https://linuxcontainers.org/lxc/getting-started/

- https://wiki.debian.org/LXC

- https://sdn-lab.com/2013/11/14/setting-up-openvswitch-2-0-mininet-2-1/

- https://blog.scottlowe.org/2014/01/23/automatically-connecting-lxc-to-open-vswitch/

- https://infologs.wordpress.com/2015/06/19/how-to-attach-lxc-container-to-ovs-openvswitch/

- https://www.thegeekdiary.com/how-to-set-external-network-for-containers-in-linux-containers-lxc/

- http://www.linux-admins.net/2013/04/connecting-kvm-or-lxc-to-open-vswitch.html

- https://ilearnedhowto.wordpress.com/2016/09/16/how-to-create-a-overlay-network-using-open-vswitch-in-order-to-connect-lxc-containers/

- https://ilearnedhowto.wordpress.com/2016/09/21/how-to-connect-complex-networking-infrastructures-with-open-vswitch-and-lxc-containers/